Architecture

Logisland has an event driven architecture that handle all the hard boilerplate code to provide a scalable stream processing framework.

Core concepts

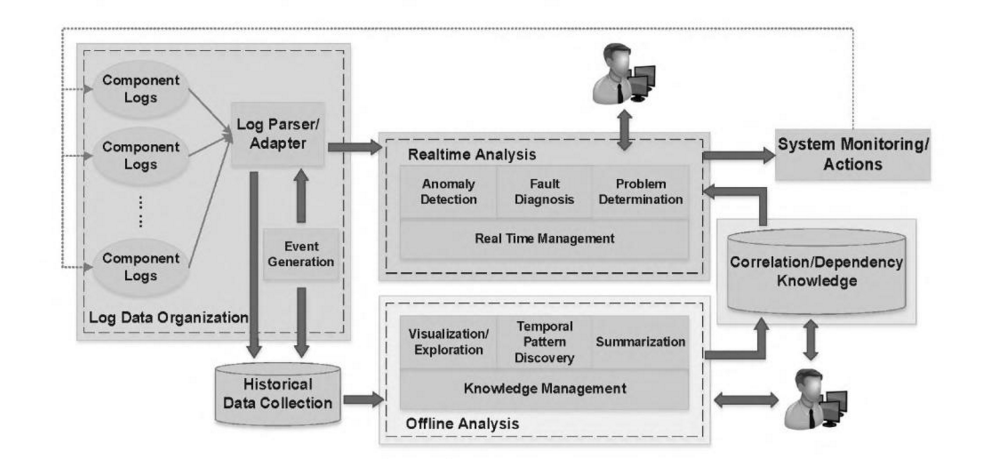

The main goal of Logisland framework is to provide tools to automatically extract valuable knowledge from historical log data. To do so we need two different kind of processing over our technical stack :

-

Grab events from logs

-

Perform Event Pattern Mining (EPM)

What we know about Log/Event properties :

-

they’re naturally temporal

-

they carry a global type (user request, error, operation, system failure…)

-

they’re semi-structured

-

they’re produced by software, so we can deduce some templates from them

-

some of them are correlated

-

some of them are frequent (or rare)

-

some of them are monotonic

-

some of them are of great interest for system operators

What is a pattern ?

Patterns, actually are a set of items subsequences or substructures that occur frequently together in a data set we call this strongly correlated. Patterns usually represent intrinsic and important properties of data.

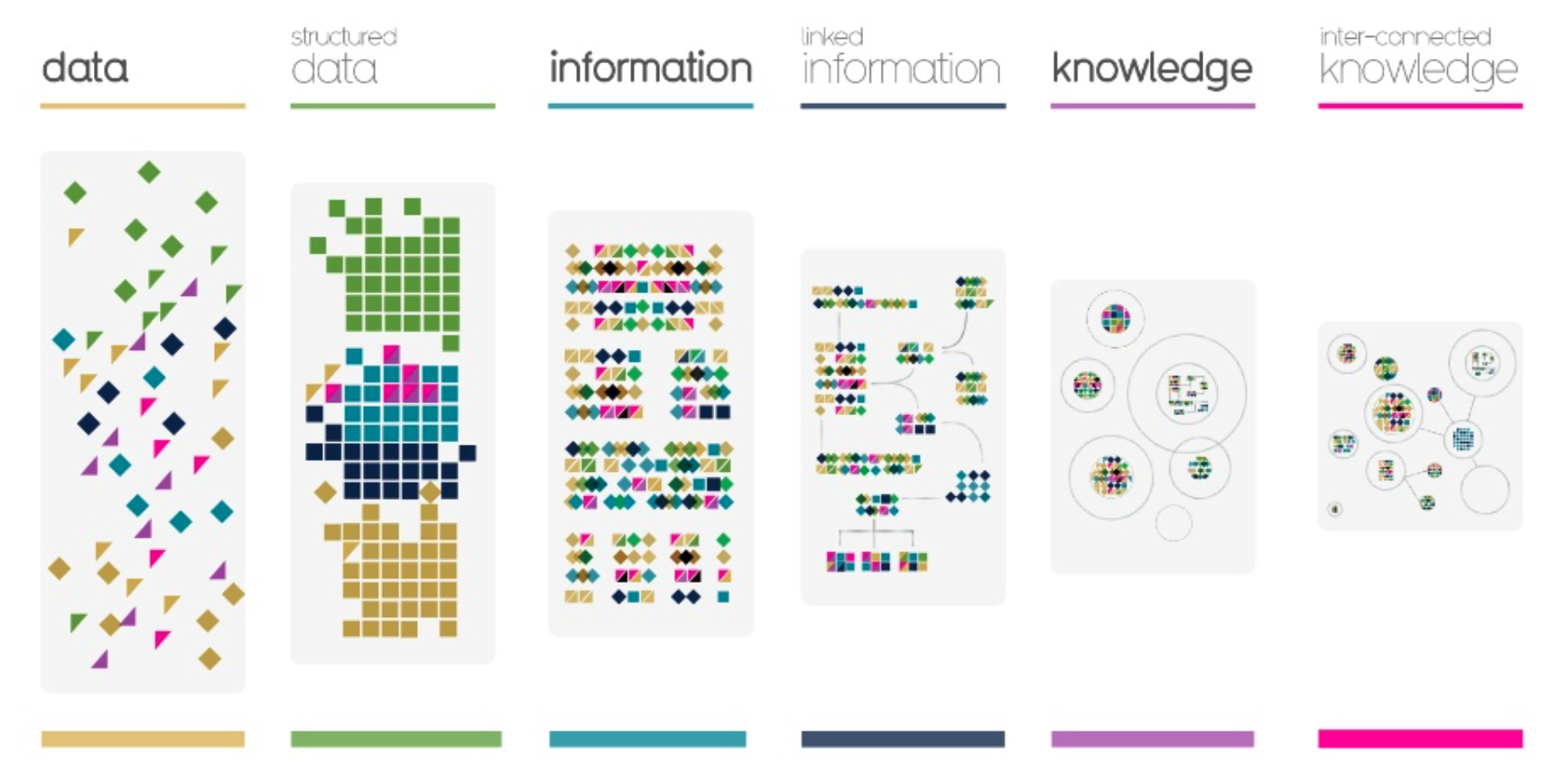

From raw to structure

The first part of the process is to extract semantics from semi-structured data such as logs.

The main objective of this phase is to introduce a canonical semantics in log data that we will call Event which will be easier for us to process with data mining algorithm

-

log parser

-

log classification/clustering

-

event generation

-

event summarization

Event pattern mining

Once we have a cannonical semantic in the form of events we can perform time window processing over our events set. All the algorithms we can run on it will help us to find some of the following properties :

-

sequential patterns

-

events burst

-

frequent pattern

-

rare event

-

highly correlated events

-

correlation between time series & events

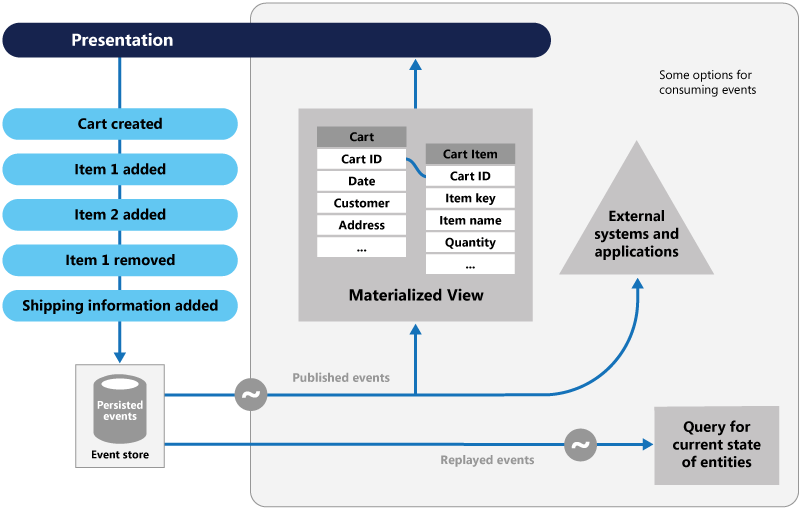

Core Philosophy : Event sourcing

Most of the systems in this data world can be observables through their events.

You just have to look at the event sourcing pattern to get an idea of how we could define any system state as a sequence of temporal events. The core nature of events can be very different from one organisation to another but consists mainly in system/application logs, sensor data, user click streams, etc. Large and complex systems, made of number of heterogeneous components are not easy to monitor, especially when have to deal with distributed computing. Most of the time of IT resources is spent in maintenance tasks, so there’s a real need for tools to help achieving them.

Use an append-only store to record the full series of events that describe actions taken on data in a domain, rather than storing just the current state, so that the store can be used to materialize the domain objects. This pattern can simplify tasks in complex domains by avoiding the requirement to synchronize the data model and the business domain; improve performance, scalability, and responsiveness; provide consistency for transactional data; and maintain full audit trails and history that may enable compensating actions.

Most applications work with data, and the typical approach is for the application to maintain the current state of the data by updating it as users work with the data. For example, in the traditional create, read, update, and delete (CRUD) model a typical data process will be to read data from the store, make some modifications to it, and update the current state of the data with the new values—often by using transactions that lock the data. The Event Sourcing pattern defines an approach to handling operations on data that is driven by a sequence of events, each of which is recorded in an append-only store. Application code sends a series of events that imperatively describe each action that has occurred on the data to the event store, where they are persisted. Each event represents a set of changes to the data (such as AddedItemToOrder).

|

Tip

|

Logisland will help us to handle replayable events from any kind of source due to its event sourcing core philosophy. |

Data driven architecture

Technical design

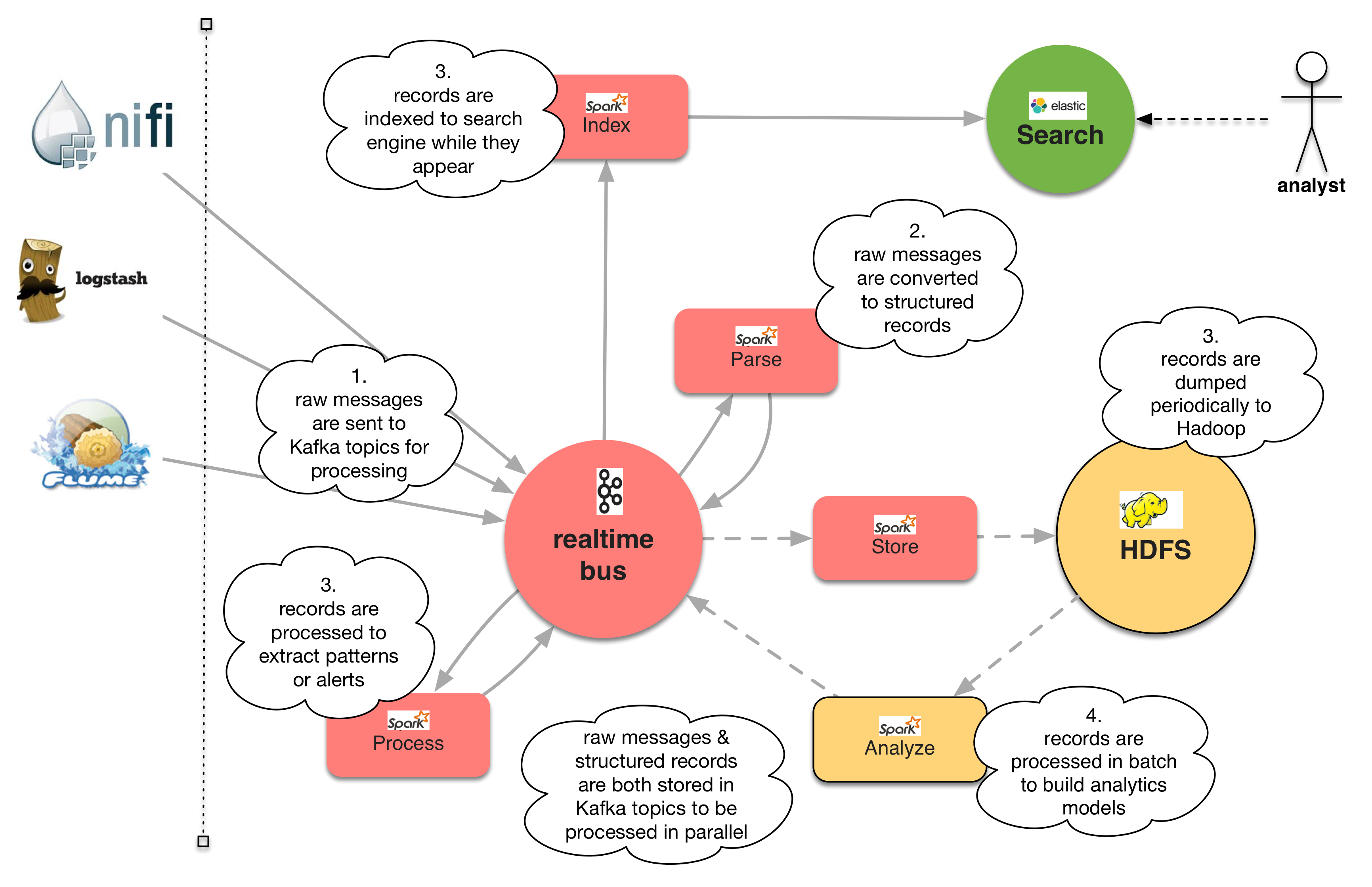

Logisland is an event processing framework based on Kafka and Spark. The main goal of this Open Source platform is to abstract the level of complexity of complex event processing at scale. Of course many people start with an ELK stack, which is really great but not enough to elaborate a really complete system monitoring tool. So with Logisland, you’ll move the log processing burden to a powerful distributed stack.

Kafka acts a the distributed message queue middleware while Spark is the core of the distributed processing. Logisland glue those technologies to simplify log complex event processing at scale.